Generative AI Ethics and Regulation: Navigating the Future Responsibly

Imran Khan

As generative artificial intelligence becomes increasingly powerful and ubiquitous, society faces unprecedented ethical challenges and regulatory complexities. The same technology that can write poetry, create art, and solve complex problems can also spread misinformation, perpetuate bias, and infringe on privacy rights. How we address these challenges today will determine whether AI becomes a force for human flourishing or a source of societal division.

The stakes couldn't be higher. With generative AI systems now capable of producing content indistinguishable from human creation, we're grappling with questions that touch the very core of human society: What is truth in an age of synthetic media? How do we preserve human agency when algorithms can manipulate our perceptions? And who should have the power to govern these transformative technologies?

The Ethical Landscape: Key Challenges

Bias and Fairness

One of the most pressing ethical concerns surrounding generative AI is the perpetuation and amplification of societal biases. Research published in Science demonstrates that large language models exhibit significant biases related to race, gender, religion, and socioeconomic status, often reflecting and amplifying patterns present in their training data.

These biases manifest in various ways:

- Representational Harm: AI systems that systematically underrepresent or misrepresent certain groups

- Stereotypical Associations: Models that reinforce harmful stereotypes about professions, capabilities, or characteristics

- Cultural Bias: Systems trained primarily on Western data that fail to understand or respect non-Western perspectives

- Historical Bias: Models that perpetuate outdated social norms and discriminatory practices

A comprehensive study by Stanford University found that even when researchers actively attempted to debias AI models, subtle forms of discrimination persisted, suggesting that addressing bias requires ongoing vigilance and sophisticated technical approaches.
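A common way to probe for the stereotypical associations described above is counterfactual testing. You hold a prompt template fixed, swap only the demographic term, and compare how a model scores each variant. The sketch below is illustrative. `score_fn` is a hypothetical stand-in for whatever model-scoring call a team actually uses, such as the likelihood a model assigns to a completion. The toy scorer is invented only so the example runs, and it does not reflect any real model.

```python
from itertools import combinations
from typing import Callable, Dict, List, Tuple


def counterfactual_gaps(
    template: str,
    groups: List[str],
    score_fn: Callable[[str], float],
) -> Dict[Tuple[str, str], float]:
    """Fill `template` with each group term and return the absolute score
    gap for every pair of groups. A large gap flags a prompt where swapping
    only the demographic term changes the model's behaviour."""
    scores = {g: score_fn(template.format(group=g)) for g in groups}
    return {
        (a, b): abs(scores[a] - scores[b])
        for a, b in combinations(groups, 2)
    }


def toy_score(prompt: str) -> float:
    # Hypothetical stand-in for a real model call. It deliberately "prefers"
    # prompts containing the word "man" so the gap is visible.
    return 0.8 if "man" in prompt.split() else 0.5


if __name__ == "__main__":
    gaps = counterfactual_gaps(
        "The {group} is a skilled engineer.", ["man", "woman"], toy_score
    )
    print(gaps)
```

Real audits repeat this across many templates and groups. They also track the gaps over time, because, as the Stanford finding suggests, a single clean run does not show that bias is gone.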

Privacy and Data Protection

Generative AI systems require vast amounts of data for training, raising significant privacy concerns. The Washington Post's investigation revealed that many AI companies have scraped personal information from social media platforms, online forums, and other digital spaces without explicit consent from users.

Privacy challenges include:

- Training Data Privacy: Personal information inadvertently included in training datasets

- Model Memorization: AI systems that can reproduce specific details from training data

- Inference Privacy: The risk of personal information being deduced from AI interactions

- Consent and Control: Limited user control over how their data is used in AI development
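One simple screen for model memorization checks whether generated text reproduces long verbatim spans of known training or sensitive text. The sketch below measures the fraction of an output's word n-grams that also appear in a reference corpus. The word-level 8-gram window and the sample strings are illustrative assumptions, not a standard. Production audits typically index far larger corpora with specialised data structures.

```python
from typing import Iterable, List, Set, Tuple

Gram = Tuple[str, ...]


def ngrams(text: str, n: int) -> List[Gram]:
    """Return the lower-cased word-level n-grams of `text`."""
    words = text.lower().split()
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]


def build_index(corpus: Iterable[str], n: int) -> Set[Gram]:
    """Collect every n-gram that occurs anywhere in the reference corpus."""
    index: Set[Gram] = set()
    for doc in corpus:
        index.update(ngrams(doc, n))
    return index


def verbatim_overlap(output: str, index: Set[Gram], n: int) -> float:
    """Fraction of the output's n-grams found verbatim in the corpus index.
    Values near 1.0 suggest the output reproduces reference text."""
    grams = ngrams(output, n)
    if not grams:
        return 0.0  # output shorter than n words: nothing to compare
    return sum(g in index for g in grams) / len(grams)


if __name__ == "__main__":
    N = 8  # illustrative window size
    corpus = ["Jane Doe lives at 12 Example Street and her phone number is 555 0100"]
    index = build_index(corpus, N)
    print(verbatim_overlap(corpus[0], index, N))
    print(verbatim_overlap("the weather today is sunny and warm with light winds", index, N))
```

A high overlap score does not prove that a privacy harm occurred. It is a signal for human review, and it is most useful when the reference corpus contains the personal data the developer is trying to protect.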

Misinformation and Deepfakes

The ability of generative AI to create convincing fake content poses serious threats to information integrity. Reuters' analysis of the 2024 election cycle documented over 500 instances of AI-generated misinformation being shared on social media platforms, reaching millions of users.

The challenges are multifaceted:

- Synthetic Media: Convincing fake videos, audio recordings, and images

- Automated Disinformation: Large-scale generation of misleading content

- Erosion of Trust: Difficulty distinguishing between authentic and synthetic content

- Malicious Use: Use of AI for harassment, fraud, and political manipulation

Global Regulatory Responses

The European Union: Leading with Comprehensive Regulation

The EU has positioned itself as a global leader in AI regulation with the EU AI Act. The Act entered into force in August 2024, and its obligations phase in over the following years. This comprehensive legislation establishes a risk-based approach to AI governance, with specific provisions for generative AI systems.

Key provisions include:

- High-Risk System Requirements: Strict oversight for AI systems used in critical applications

- General-Purpose AI Model Obligations: Special requirements for large generative and foundation models, with additional duties for those deemed to pose systemic risk

- Transparency Mandates: Requirements to disclose AI-generated content

- Prohibited Practices: Bans on AI systems that pose unacceptable risks to human rights

An analysis by the Brookings Institution suggests that the EU's approach could become a global standard, much as GDPR shaped privacy regulation worldwide.

United States: A Patchwork Approach

The U.S. has taken a more fragmented approach to AI regulation, with initiatives across federal agencies and state governments. President Biden's October 2023 Executive Order on AI (EO 14110) was the most comprehensive federal action of its time and established safety and security standards for AI development. It was revoked in January 2025.

Key U.S. initiatives include:

- NIST AI Risk Management Framework: Voluntary guidelines for AI development and deployment

- Federal AI Use Standards: Requirements for government use of AI systems

- Research and Development Investments: Funding for AI safety and alignment research

- International Cooperation: Efforts to establish global AI governance norms

Asia-Pacific: Diverse Approaches

Countries across the Asia-Pacific region are pursuing varied regulatory strategies. A comparative analysis by the East Asia Forum shows significant differences in approach:

- China: Strict content controls and algorithmic accountability requirements

- Japan: Industry self-regulation and international cooperation focus

- Singapore: Sandbox approaches for testing AI governance frameworks

- South Korea: Sector-specific regulations and AI ethics guidelines

Industry Self-Regulation and Standards

Corporate Responsibility Initiatives

Major AI companies have developed internal governance frameworks and ethical guidelines. OpenAI's safety policies, Google's AI principles, and Anthropic's safety research represent industry efforts to self-regulate.

Common elements include:

- Red Team Testing: Systematic attempts to find vulnerabilities and harmful outputs

- Content Filtering: Technical measures to prevent harmful content generation

- Use Case Restrictions: Policies limiting certain applications of AI technology

- Transparency Reports: Regular disclosures about AI system capabilities and limitations

Multi-Stakeholder Initiatives

The Partnership on AI and similar organizations bring together companies, researchers, and civil society groups to develop best practices and standards. These collaborative efforts are creating industry-wide norms for responsible AI development.

Technical Approaches to Ethical AI

Alignment and Safety Research

Researchers are developing technical methods to ensure AI systems behave in accordance with human values. Recent advances in AI alignment research include:

- Constitutional AI: Training models to follow explicit principles and values

- Reinforcement Learning from Human Feedback (RLHF): Using human preferences to guide AI behavior

- Interpretability Research: Understanding how AI systems make decisions

- Robustness Testing: Ensuring AI systems perform safely across diverse scenarios
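The human-feedback step in RLHF is commonly implemented by training a reward model on pairs of responses in which a person preferred one response over the other. A widely used formulation is the Bradley-Terry pairwise loss, -log(sigmoid(r_chosen - r_rejected)). This loss is small when the reward model scores the preferred response higher. The sketch below computes that loss for plain numeric reward scores under simplifying assumptions. In a real pipeline, a neural network produces the rewards, and a separate reinforcement learning stage then optimises the policy against them.

```python
import math
from typing import List, Tuple


def pairwise_preference_loss(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry loss: -log(sigmoid(r_chosen - r_rejected)).

    Equivalent to log(1 + exp(-margin)). Both branches below compute that
    value without overflowing exp() for large-magnitude margins."""
    margin = r_chosen - r_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    # For negative margins, log(1 + e^-m) = -m + log(1 + e^m).
    return -margin + math.log1p(math.exp(margin))


def mean_loss(pairs: List[Tuple[float, float]]) -> float:
    """Average loss over (chosen_reward, rejected_reward) pairs."""
    return sum(pairwise_preference_loss(c, r) for c, r in pairs) / len(pairs)


if __name__ == "__main__":
    # Made-up reward scores: the model agrees with the human on the first
    # pair and disagrees on the second, which contributes a larger loss.
    print(mean_loss([(2.0, 0.5), (0.1, 1.2)]))
```

When the two rewards are equal, the loss is log 2. Training pushes the margin in favour of human-preferred responses, and that margin is how the human preference signal enters the system.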

Detection and Mitigation Tools

Technical solutions for identifying and addressing AI-generated content are rapidly evolving:

- Watermarking: Embedding invisible markers in AI-generated content

- Detection Algorithms: AI systems trained to identify synthetic content

- Provenance Tracking: Blockchain and cryptographic methods to verify content origin

- Real-time Monitoring: Systems that can detect and flag suspicious content at scale

Research from MIT has reported promising results for watermarking techniques that remain robust even when content is modified or compressed.
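One published family of text watermarks makes the idea concrete: the "green list" scheme of Kirchenbauer et al. (2023). At generation time, a hash of the previous token marks a fraction gamma of the vocabulary as "green," and the model is nudged toward green tokens. Detection needs no access to the model. You count green tokens and compute a z-score against the count expected by chance, z = (green - gamma*T) / sqrt(T*gamma*(1 - gamma)).

The sketch below shows only the detection side under illustrative assumptions:

- It scores whitespace-separated words instead of real tokenizer IDs.
- It uses a hypothetical SHA-256 green-list rule.
- It fixes gamma at 0.25.

It should not be read as the technique behind the MIT results.

```python
import hashlib
import math

GAMMA = 0.25  # illustrative fraction of the vocabulary treated as "green"


def is_green(prev_word: str, word: str, gamma: float = GAMMA) -> bool:
    """Pseudo-randomly assign `word` to the green list, seeded by the
    previous word. Hashing the pair stands in for seeding an RNG with the
    previous token ID and partitioning the vocabulary."""
    digest = hashlib.sha256(f"{prev_word}|{word}".encode("utf-8")).digest()
    value = int.from_bytes(digest[:8], "big") / 2**64  # uniform in [0, 1)
    return value < gamma


def watermark_z_score(text: str, gamma: float = GAMMA) -> float:
    """z-score of the green-word count over T scored words. A large
    positive z suggests the text was generated with the watermark."""
    words = text.split()
    t = len(words) - 1  # the first word has no predecessor to seed from
    if t <= 0:
        return 0.0
    green = sum(is_green(words[i - 1], words[i], gamma) for i in range(1, len(words)))
    return (green - gamma * t) / math.sqrt(t * gamma * (1 - gamma))


if __name__ == "__main__":
    # Simulate a watermarking generator that always picks a green word
    # from a toy vocabulary, then run the detector on its output.
    vocab = [f"w{i}" for i in range(200)]
    words = ["start"]
    for _ in range(50):
        words.append(next(w for w in vocab if is_green(words[-1], w)))
    print(watermark_z_score(" ".join(words)))
```

Ordinary text should score near zero. Heavy paraphrasing replaces words and re-randomises the green list, which erodes the score. This is why robustness to modification is the property the research above emphasises.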

Societal and Economic Implications

Impact on Employment and Labor

Generative AI's effect on the job market raises important questions about economic justice and social stability. OECD research suggests that while AI may displace certain jobs, it could also create new opportunities and increase productivity in others.

Key considerations include:

- Job Displacement: Certain roles, particularly in content creation and analysis, face automation risks

- Skill Requirements: Changing demands for human skills in an AI-augmented economy

- Economic Inequality: Potential for AI to exacerbate existing disparities

- Transition Support: Need for retraining and social safety net programs

Democratic and Social Implications

The widespread adoption of generative AI raises fundamental questions about democratic governance and social cohesion. Political scientists argue that AI's ability to influence public opinion and shape information landscapes poses new challenges to democratic institutions.

Emerging Governance Models

Adaptive Regulation

Given the rapid pace of AI development, traditional regulatory approaches may be too slow and inflexible. Harvard's governance research advocates for adaptive regulatory frameworks that can evolve with technology.

Key principles include:

- Regulatory Sandboxes: Safe spaces for testing new AI applications

- Iterative Policy Development: Regular updates to regulations based on empirical evidence

- Multi-Stakeholder Governance: Involving diverse voices in regulatory decision-making

- Risk-Based Approaches: Focusing oversight on higher-risk applications

International Cooperation

AI governance increasingly requires international coordination. The UN's High-level Advisory Body on Artificial Intelligence and similar initiatives are working to establish global norms and standards for AI development and deployment.

Best Practices for Responsible Development

For AI Developers

- Ethical Design: Incorporate ethical considerations from the earliest stages of development

- Diverse Teams: Ensure development teams reflect the diversity of users and affected communities

- Rigorous Testing: Conduct comprehensive evaluations for bias, safety, and robustness

- Stakeholder Engagement: Involve affected communities in the development process

- Transparency: Provide clear information about system capabilities and limitations

For Organizations Deploying AI

- Due Diligence: Thoroughly evaluate AI systems before deployment

- Human Oversight: Maintain meaningful human control over critical decisions

- Monitoring Systems: Implement ongoing monitoring for bias and harmful outcomes

- Feedback Mechanisms: Create channels for users to report problems and concerns

- Regular Audits: Conduct periodic assessments of AI system performance and impact
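For deploying organizations, ongoing bias monitoring often starts with simple outcome metrics computed from decision logs. The sketch below computes per-group approval rates and the largest gap between them, a measure known as the demographic parity difference. The log format, group labels, and 0.1 alert threshold are hypothetical. The right fairness metric depends on the application and on applicable law.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Per-group share of positive outcomes from (group, approved) records."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(rates: Dict[str, float]) -> float:
    """Largest difference in selection rate between any two groups."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


ALERT_THRESHOLD = 0.1  # hypothetical tolerance; set per application


if __name__ == "__main__":
    log = [("A", True), ("A", True), ("A", False), ("A", True),
           ("B", True), ("B", False), ("B", False), ("B", False)]
    rates = selection_rates(log)
    gap = parity_gap(rates)
    print(rates, gap, "ALERT" if gap > ALERT_THRESHOLD else "ok")
```

A breached threshold should route the case to the human oversight and audit processes listed above rather than trigger an automatic fix. A gap can have legitimate explanations that only a review can establish.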

The Path Forward: Building Trustworthy AI

Emerging Consensus Principles

Despite diverse approaches, a global consensus is emerging around core principles for responsible AI:

- Human-Centered Design: AI systems should enhance rather than replace human agency

- Fairness and Non-Discrimination: AI should not perpetuate or amplify bias

- Transparency and Explainability: Users should understand how AI systems work and make decisions

- Privacy and Data Protection: Personal information should be protected and used responsibly

- Accountability: Clear responsibility for AI system outcomes

- Safety and Security: AI systems should be robust and secure against misuse

Future Challenges and Opportunities

As generative AI continues to evolve, new ethical and regulatory challenges will emerge:

- Artificial General Intelligence: Preparing for potentially more powerful AI systems

- Cross-Border Governance: Managing AI in an interconnected global economy

- Democratic Participation: Ensuring public input in AI governance decisions

- Long-term Impact Assessment: Understanding AI's effects on human society and culture

Conclusion: Shaping a Responsible AI Future

The ethical and regulatory challenges surrounding generative AI are not merely technical problems to be solved—they are fundamental questions about the kind of society we want to build. How we address these challenges will determine whether AI becomes a tool for human empowerment or a source of inequality and harm.

Success will require unprecedented cooperation between technologists, policymakers, ethicists, and civil society. We need regulatory frameworks that are both protective and innovation-friendly, technical solutions that embed human values, and governance structures that ensure broad participation in decisions about AI's role in society.

The window for shaping AI's trajectory is narrowing as these technologies become more powerful and pervasive. The choices we make today about ethics, regulation, and governance will echo through the decades to come. By acting thoughtfully and collaboratively, we can work toward a future where generative AI enhances human flourishing while respecting our values, rights, and dignity.

The path forward is not predetermined. It's up to all of us—developers, users, policymakers, and citizens—to ensure that the age of generative AI becomes a chapter of human progress rather than a cautionary tale about technology without wisdom.

Featured Tools

Nectar AI

Dive into immersive roleplay adventures and personalized scenarios tailored exactly to your desires.

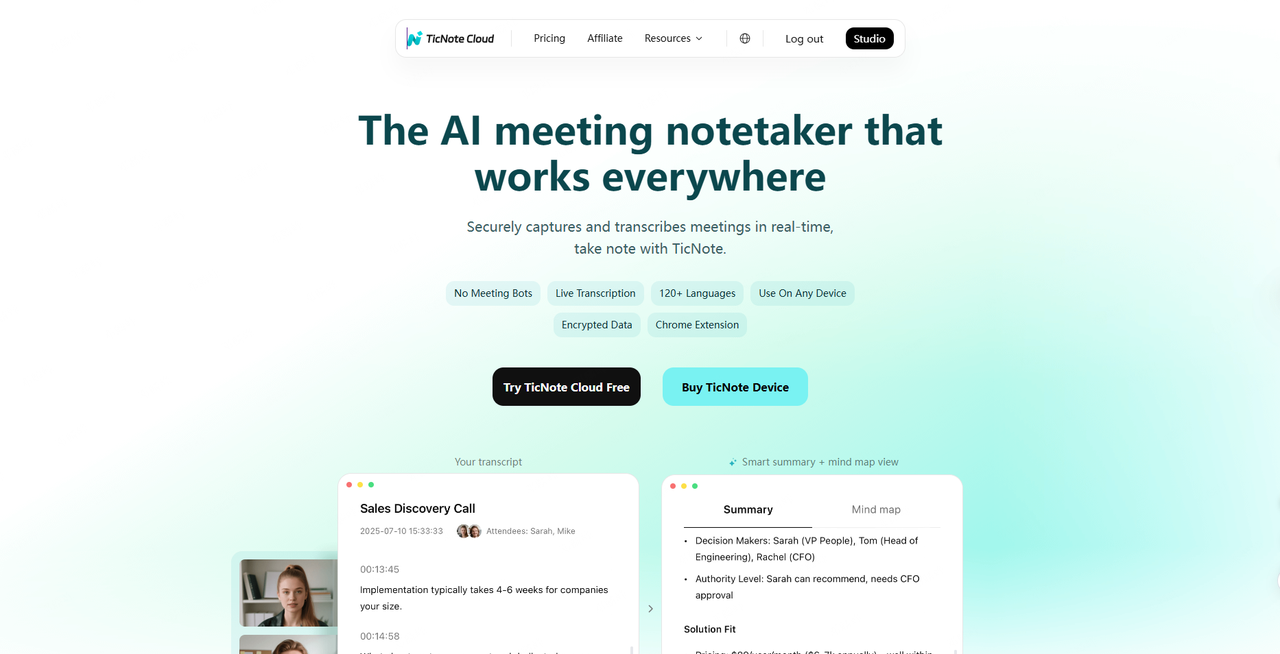

TicNote Cloud

From real-time meeting transcription to multilingual summaries and knowledge insights, our AI note taker helps you organize, share, and never miss what matters.

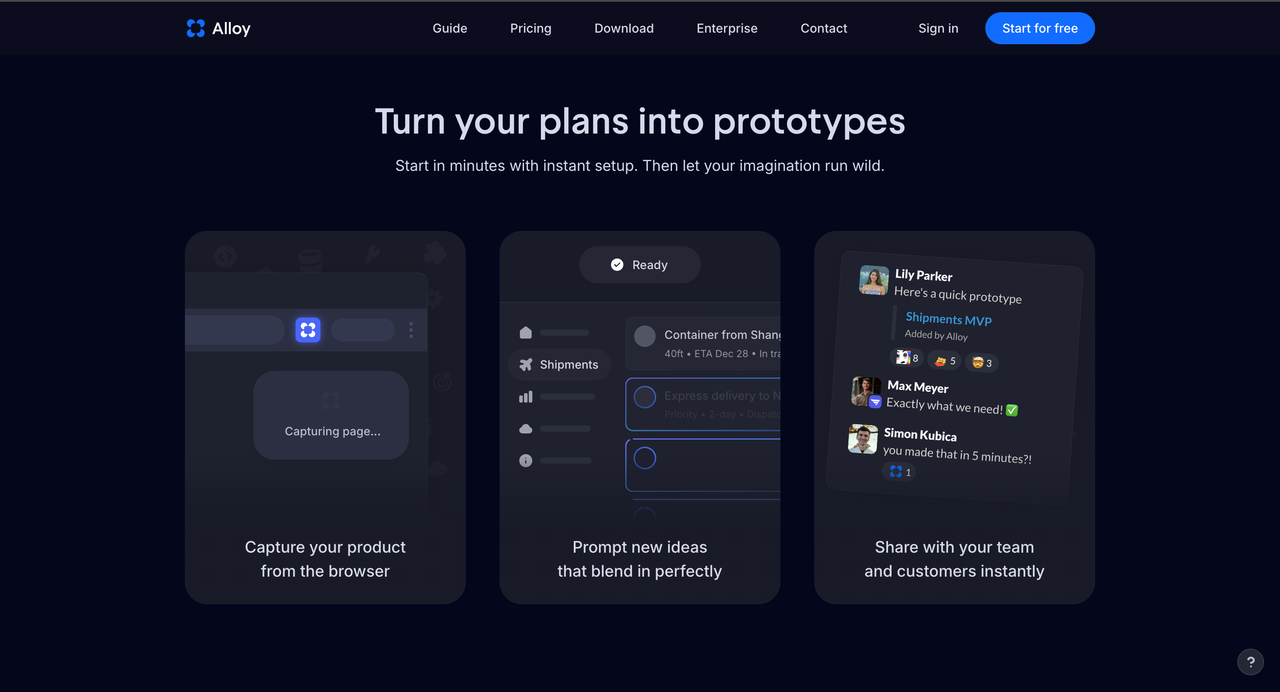

Alloy

Alloy AI Prototyping